Success Case

This case study highlights how the AI agent PEP performed in answering frequently asked questions (FAQs) and guiding users on the use of medical cannabis.

Luca Spektor - Growth Specialist

Jan 15, 2025

5 min read

Case Study: AI Chatbot for Medical Cannabis Education

Project Overview

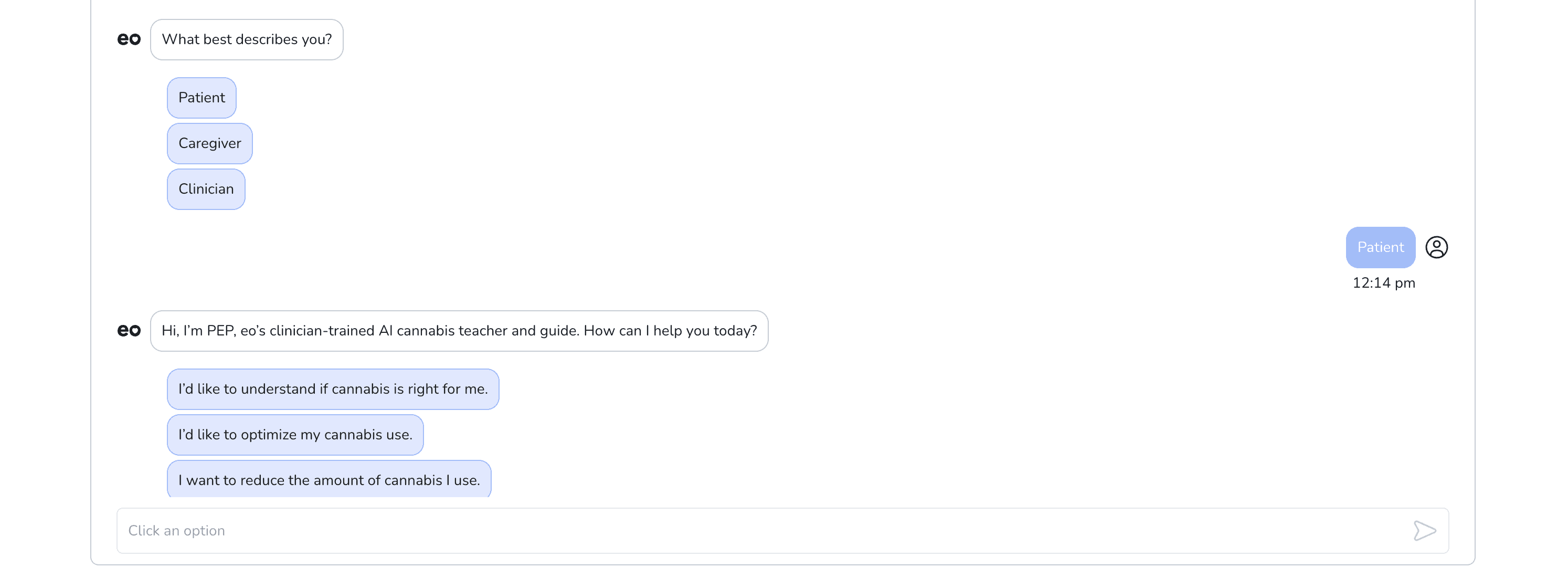

An AI chatbot, “PEP,” was deployed to support patients and healthcare consumers by answering common questions about medical cannabis. The goal was to help users navigate information about cannabis dosage, potential benefits, and risks, empowering them to make informed decisions. To evaluate the chatbot’s performance, four key metrics were tracked: Inaccuracy/Not Responded Queries, Drop-off, Hallucinations, and Escalate to a Human (Doctor). The project specifics are outlined below.

Performance Metrics

Definitions

Inaccuracy / Not Responded Queries

Inaccuracy: The AI provides an incorrect or misleading answer.

Not Responded: The AI fails to reply altogether.

Drop-off

The conversation ends prematurely because the user abandons the chat.

Hallucinations

The AI “invents” facts or references that are not grounded in its knowledge base.

Escalate to a Human (Doctor)

The user actively requests to speak with a doctor, prompting a handover to a human expert.
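The four definitions above can be turned into the percentages reported later in this case study. The following is a minimal Python sketch that assumes each metric is logged as a yes/no flag per conversation. The article does not say whether the rates were computed per conversation or per query, and the `Conversation` record, its field names, and `metric_rates` are hypothetical, not part of PEP’s actual tooling.

```python
from dataclasses import dataclass

# Hypothetical per-conversation flags, one per metric defined above.
@dataclass
class Conversation:
    inaccurate_or_unanswered: bool = False
    dropped_off: bool = False
    hallucinated: bool = False
    escalated_to_doctor: bool = False

METRICS = (
    "inaccurate_or_unanswered",
    "dropped_off",
    "hallucinated",
    "escalated_to_doctor",
)

def metric_rates(conversations: list[Conversation]) -> dict[str, float]:
    """Return each metric as a percentage of all conversations, rounded to 2 decimals."""
    total = len(conversations)
    if total == 0:
        raise ValueError("no conversations to evaluate")
    return {
        # sum() over booleans counts the flagged conversations for this metric
        name: round(100 * sum(getattr(c, name) for c in conversations) / total, 2)
        for name in METRICS
    }

if __name__ == "__main__":
    # Hypothetical sample: 455 sessions, exactly one of which hallucinated.
    sample = [Conversation() for _ in range(455)]
    sample[0].hallucinated = True
    print(metric_rates(sample)["hallucinated"])  # 0.22
```

With a hypothetical 455 sessions, a single flagged conversation rounds to 0.22%. The article does not state how many conversations were actually evaluated, so this sample size is for illustration only.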

Case Highlight: A Mock Example of a Successful Conversation

Note: The following conversation with “Mary” is a fictionalized (mock) scenario based on typical user interactions. It illustrates how the chatbot typically guides someone seeking information on medical cannabis.

Context: Mary, a 39-year-old patient, is exploring ways to manage her long-term anxiety, panic attacks, and related sleep issues. She already uses prescription medications but wishes to find out if cannabis could help supplement or replace them.

Initial Interaction

Mary opens the conversation: “Is cannabis right for me?”

PEP responds empathetically and gathers preliminary information (age, existing medical conditions, and medication history) to ensure its advice is safe and relevant.

Medical Details

Mary describes her ongoing anxiety, panic attacks, and sleep disruption.

She currently takes prescription medication for anxiety and Wegovy for weight loss.

PEP confirms that she has no significant contraindications, such as cardiovascular disease or pregnancy.

Cannabis Experience

Mary mentions she once consumed a high-THC edible that triggered a panic attack.

PEP explains that balanced formulations or lower-THC products may help avoid negative effects.

Recommended Guidance

PEP suggests that she consult her physician about starting with small doses, particularly of CBD-rich formulations.

Mary thanks PEP, feeling more confident about exploring medical cannabis with proper supervision.

Outcome: Although purely illustrative, this conversation shows how PEP provides tailored, step-by-step guidance, addresses specific concerns, and encourages medical oversight.

Hallucination Example

PEP performed reliably in most conversations, but one hallucination event was recorded:

User: “What is PEP?”

Chatbot: “PEP stands for Post-Exposure Prophylaxis (a treatment for HIV exposure) ...”

In this instance, the AI mistakenly interpreted the acronym “PEP” as Post-Exposure Prophylaxis rather than its own name (“PEP,” the AI chatbot for cannabis education). This isolated error stems from the chatbot’s difficulty disambiguating identical acronyms used in different medical contexts.

Analysis of Results

Inaccuracy/Not Responded Queries (3.93%)

Most inaccuracies came from ambiguous questions or incomplete user information. At under 4%, this rate indicates a strong baseline of correctness.

Drop-off (4.59%)

Just under one in twenty conversations ended prematurely without resolution. In other words, more than 95% of users stayed in the conversation, which suggests that most found the chatbot’s answers helpful.

Hallucinations (0.22%)

Only one fabrication was confirmed. While this shows that the AI is largely reliable, continued domain tuning is crucial to prevent misinterpretation of acronyms and medical jargon.

Escalate to a Human (0.22%)

In the single case where a user explicitly asked to speak with a doctor, the conversation was appropriately handed over. The low frequency suggests that most queries remained within the chatbot’s scope.

Conclusion

Overall, PEP proved highly effective at addressing user questions about medical cannabis. In most sessions, users received accurate, helpful information without requiring escalation to a human clinician. A single hallucination event underscores the importance of continuously improving domain-specific handling to prevent confusing or erroneous responses. Nonetheless, this pilot project showed how an AI chatbot can serve as a scalable and valuable tool for educating individuals on medical cannabis, supporting them in making more informed health decisions.
